Overview of Intervention

Samala Moyo (‘Care for your life’) is a museum-like interactive exhibition that aims to engage people with health and health research. It follows themes linked to the research of the Malawi-Liverpool-Wellcome Trust Research Programme (MLW): Malaria, TB & HIV, Non-Communicable Diseases (NCD), and Microbes, Immunity and Vaccines (MIV). Samala Moyo targets health workers, medical researchers, media, students and community members in the surrounding communities through a permanent exhibition based in the grounds of MLW in Blantyre. The project also has an outreach component, which targets school children in upper primary and secondary schools and community members in the three districts of Blantyre, Chikwawa and Chiradzulu.

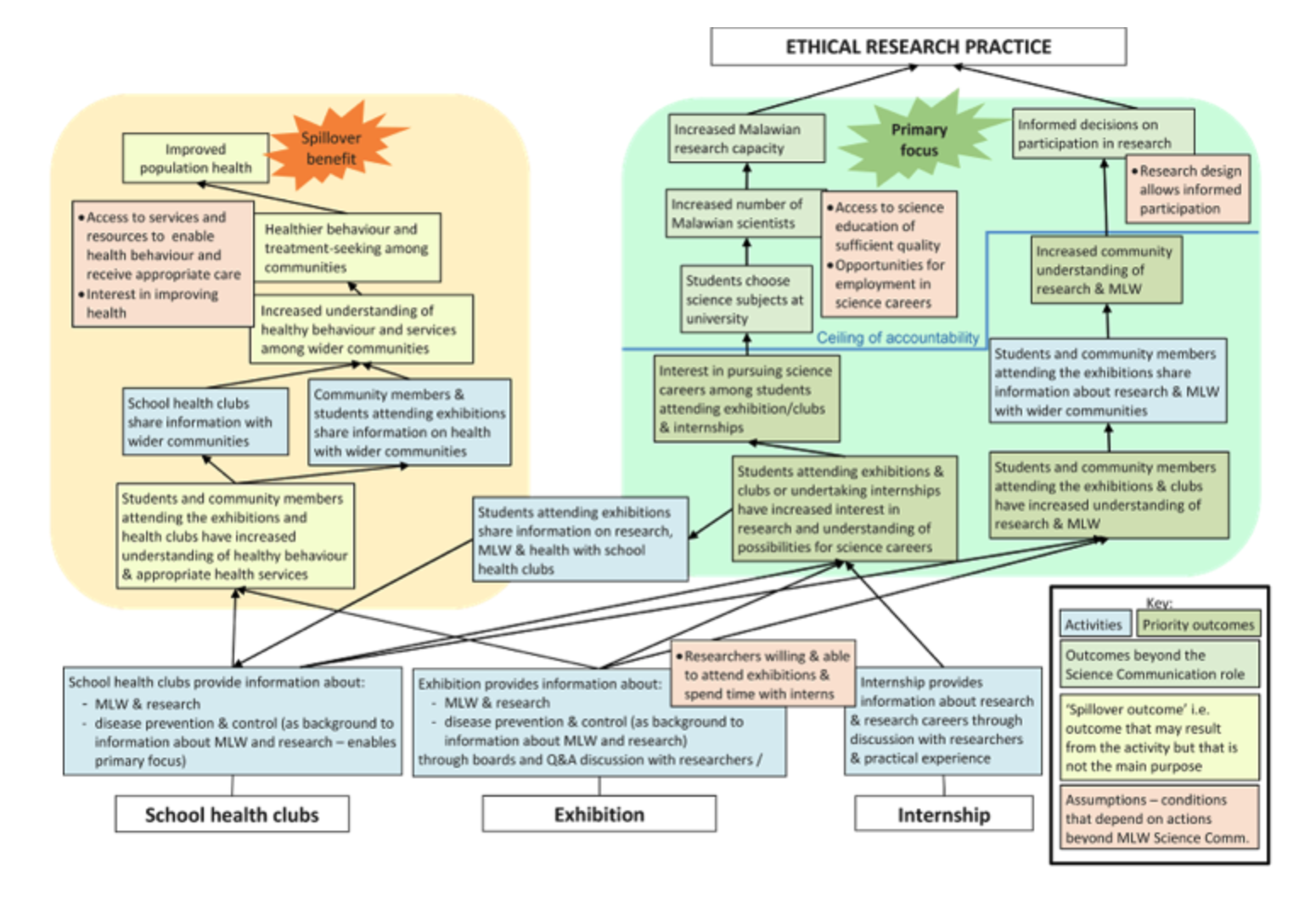

The project has used focus group discussions, exhibition games, teachers’ evaluation forms, plenary discussions and students’ video diaries as evaluation tools. The team is thinking of initiating a theory of change approach in which they will evaluate the project at every stage of implementation to explore how each step contributes to meeting the project objectives. This approach, which is grounded in theory, is new for the project team. So far this framework has demonstrated that some goals are beyond the power of MLW to achieve alone and that the contribution of other players, like the Government, is important. The team has also become aware that some of the goals are overly ambitious and that long-term planning and follow-up are needed to see whether objectives are achieved and whether this is attributable to the project activities.

MLW hopes to learn from implementing the theory of change approach and will share what it learns with other Wellcome Trust major overseas programmes.

The following summarises the discussion between the presenter, the discussant and the rest of the room about the case study presented.

The Engagement Interventions – Key Points

The group discussed the exhibition project as more than one single activity. Instead, it was thought of as a set of multiple interventions with an exhibition at their core.

These interventions need to be described carefully: What are the interventions? How do they relate to each other? And how do they change over time?

The last of these is particularly important when evaluating engagement activities and interventions. Ways of working in engagement are, and should be, responsive, so interventions should be expected to change, and capturing this change is very important. The evaluation methods chosen were applied within a Theory of Change framework, which is described further below, after a presentation of the evaluation methods. Using a theory of change seeks to make assumptions clear and progressively explore them rather than sticking to an initial design; it embraces the changing nature of engagement interventions and allows that change to be captured.

The Evaluation Methods

Innovative methods

The current programme of work uses some standard methods of evaluation, like focus group discussions and self-administered questionnaires, but also more innovative methods, like games, video diaries and participatory video. It is hoped that these methods make the engagement and evaluation more fun, and so more appealing to participants, and, in some cases, provide more in-depth responses. These methods are also thought to level out the power imbalances between practitioners and participants that are often present in health engagement work, permitting a more honest exchange and strengthening both the engagement and the evaluation.

Resource

In this case, there were 18 focus group discussions (FGDs), which take a lot of time and resource. Evaluation activity is often resource heavy, so we need to consider carefully which methods to prioritise, and how essential they are to get where we need to be. Would there be value in doing fewer focus groups and more light-touch methods?

The Theory of Change approach used also requires a significant time investment, although, as MLW found in this case, the more Theories of Change are developed within a programme, the quicker it becomes to develop them for other interventions in the programme.

Duality of evaluation methods

48 FGDs is an impressive set of activities, in which the evaluation team and community participants are interacting. Depending on how interactive the FGDs are, these may be considered engagement activities in themselves. All social interactions influence everyone involved, especially when it comes to more interactive and participatory methods like participatory video. These should not be dismissed as “just evaluation activities” but recognised as part of the engagement interventions themselves.

When planning evaluation methods, we should think about justifying them carefully in relation to our goals and the resource required to deliver them. We may need to be conscious of whether our chosen evaluation methods might also be engagement interventions. This is important as the theory of change we use to frame our evaluation should be sensitive to both our core engagement activities and these subsidiary activities. It may be fruitful to consider how the evaluation interventions would map onto the theory of change.

Evaluation Approach – The Theory of Change

As outlined above, the project team started to embed their evaluation methods within a theory of change. Here we present the theory of change (ToC) for the project to demonstrate the usefulness of the ToC approach when evaluating complex interventions like those used in community engagement with research.

A theory of change framework lays out activities, assumptions and the different outcomes that might be expected. People who like logical frameworks for planning projects [http://www.betterevaluation.org/en/evaluation-options/logframe] may like ToC because it considers the pathways between activities and outcomes, allowing them to plan their evaluation approach carefully at the outset and to attempt to attribute outcomes to activities. Those who like to explore emergent outcomes may also like the ToC approach [https://mesh.tghn.org/articles/theory-change-mesh-introduction/] because, with ToC, assumptions are made explicit, which encourages you to re-examine them and make changes as you delve into the project. It is therefore not designed to be a static framework but something dynamic, which shifts shape iteratively throughout the project. Any assumptions made need to be made explicit from the outset, and added to over time, so that evidence can be gathered to support or refute them in evaluation.

As it is a dynamic activity, rather than a static road map, comparing the ToC developed at the start of a project and the ToC that results at the end of the project can be a useful evaluation exercise. Articulating changes between the two ToCs and writing these up is a valid use of a ToC model, but the focus of the evaluation is usually on refining it as you go. The process of creating and refining the ToC allows you to understand the activities and why you are doing them. It is a process for practice as well as evaluation.

Risks and unintended consequences can also be anticipated and mapped in a theory of change, though in this case they were not, as there was no time and the team had to prioritise what was possible.

Ceiling of Accountability

The ‘ceiling of accountability’ is a concept used within the application of the ToC method. It makes clear the point past which a project or programme cannot accept responsibility or claim credit for outcomes. Below the ceiling of accountability are the things that it is felt the interventions can influence, and it is these that the group will monitor. Above it are wider goals that are outside the influence of the interventions but worth capturing and keeping sight of. In results-based management this is called “attribution”.

Primary Focus Vs Spill Over Benefits

The spill over area in the diagram aims to capture things that are outside the primary goal of the project. It is not that they are unimportant but, to keep monitoring manageable, they are not systematically tracked.

Linkages between the two areas are, however, obscure. It may, with more time and resource, be fruitful to map the dissemination of ideas between the students on each side and the communications between them.

Measuring Outcomes

The outcomes mapped are quite intangible, like “interest in pursuing careers”. There is a question of how you marry these with SMART indicators and objectives: Specific, Measurable, Attainable, Realistic, and Timely. As activities are aligned to the school curriculum, it was suggested that something like test scores would be more SMART, but there was a question of whether these are useful, whether they actually measure what we are interested in, and whether they are indeed feasible indicators.

How was the ToC developed?

The overall ToC for the MLW science communication department was developed in this case through a one-day workshop. From this, four ToCs for individual programmes, like the one presented here, were developed through meetings with community members.

The ToC was built based on practitioners’ wisdom, tacit knowledge, and hopes for the programme, not published evidence, but it will be evaluated using evidence.

There is a feeling that validating the ToC with stakeholders is very important; to this end, some FGDs will be held in a year’s time to review the ToC.

Evaluation Goals

Filling Gaps

The goals in the programme’s ToC seem to be focused on filling gaps in the knowledge of students and publics. However, researchers are not listed as stakeholders in the case study presented. There is an omission here with regard to what researchers will gain from the interventions. If we understand engagement as a two-way process, we should be looking wherever possible at where researchers might learn or gain from the engagement process and what the impact will be on them and the research itself.

This is explicit in evaluation work for some of MLW’s other engagement activities but, because not as many researchers are involved in delivering the exhibition and its related interventions, it has not been captured as a key impact for this one.

Defining Goals

Ethical research practice is very ambitious as a goal. There was a question of whether it is necessary to have a goal this ambitious. This is the overall goal of the science communication programme at MLW and is well defined in the department’s overall ToC.

The areas at the bottom of the ToC might also be considered goals – discuss health issues, increased information on health – and map well onto the MLW programme aims.

Goals Evolving Over Time

Interventions change over time and ToC gives the possibility to rethink the goals as you go.

In this case, healthy behaviours have clearly emerged as a “spill over benefit”. If this is important and seems to be happening, regardless of whether it was an original intention of the interventions, perhaps the goals should be shifted to incorporate it. Shifting over time is good, not bad, in our field. This harks back to previous points on why ToC is a good approach, as it allows for change, and on the importance of revisiting and shifting your ToC as the project develops.

KEMRI [link to KEMRI schools case study] provided an anecdote of when they went to evaluate their schools’ activities with the goal of improving school grades, and assumed a linear process that would lead to this. But there are so many assumptions along the line, such as the quality of education and the amount of other interactions compared to the intervention you provided, so they suggest that we need to think about whether and how a one-hour interaction in an engagement and/or evaluation activity can feasibly translate into a programme goal. Perhaps, rather than being constrained by a linear method of planning and implementation, we need to be more open ended; participatory methods of evaluation allow you to capture the less tangible “spill over benefits” that can be as important as, if not more important than, your original goals. Examples include the aspirational effects of meeting scientists, or the enrichment that comes with being exposed to the richness of life, just as you might take a child to see dance or music. There is a risk when developing evaluation plans that we miss the outcomes that are just as important but not as easily measurable. Capturing unintended consequences and exposing them so that funders and decision makers see their value is not to be forgotten or underestimated.

This resource resulted from the March 2017 Mesh Evaluation workshop. For more information and links to other resources that emerged from the workshop (which will be built upon over time) visit the workshop page.

For a comprehensive summary of Mesh's evaluation resources, and to learn how to navigate them, visit the Mesh evaluation page.

This work, unless stated otherwise, is licensed under a Creative Commons Attribution 4.0 International License
